A network administrator needs to monitor the network and its services as a whole, across multiple use cases, domains, and technologies. For example, a cloud-native video application running in VMs/containers on specific redundant servers and streaming over multicast across the country involves multiple (most of the time siloed) domains & competencies: application, cloud, server, and networking … where networking could be subdivided into IP, optical, and mobile domains.

The issue is that different technology domains and different protocols come with different data models. In order to assure cross-domain use cases, the network management system and network operators must integrate all the technologies, protocols, and therefore data models. In other words, they must perform the difficult and time-consuming job of integrating & mapping information from different data models. Indeed, in some situations, there are different ways to model the same type of information.

This problem is compounded by a large, disparate set of data sources: MIB modules for monitoring, YANG models [RFC7950] for configuration and monitoring, IPFIX information elements [RFC7011] for flow information, syslog plain text [RFC3164] for fault management, TACACS+ [RFC8907] or RADIUS [RFC2865] in the AAA (Authentication, Authorization, Accounting) world, BGP FlowSpec [RFC5575] for BGP filtering, OpenFlow for the control plane, etc… or even simply the router CLI for router management.

Some network operators still manage configuration with the CLI while they monitor operational state with SNMP/MIBs. How difficult is that in terms of correlating information? Some others moved to NETCONF/YANG for configuration but still need to transition from SNMP/MIBs to model-driven telemetry … to take advantage of having a single data model for both config and operational data.

When network operators deal with multiple data models, the task of mapping the different models is time-consuming, hence expensive, and difficult to automate. This information is certainly not new. Actually, I’ve been stressing it for more than 10 years, if not 15 …, but since I have to repeat it on a regular basis, here is a blog post about it.

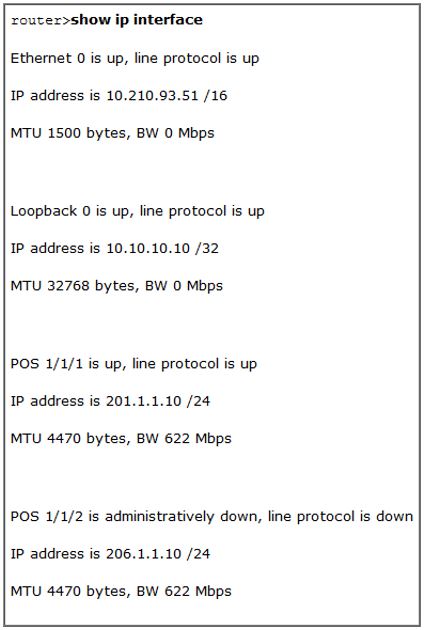

To make it crystal clear, let’s illustrate this with a very simple and well-known networking concept: an interface. Let’s start with a basic CLI command: “show ip interface” for basic interface information.

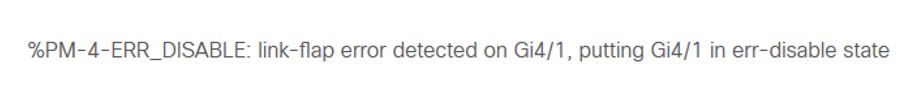

And below is a plain English syslog message… which is barely actionable because there is no standard for naming interfaces in routers: Gi4/1 or Giga4/1 or GE4/1 or Gigabit4/1 or GigabitEthernet4/1 or something else? Well, it depends on the vendor, platform, and OS version!

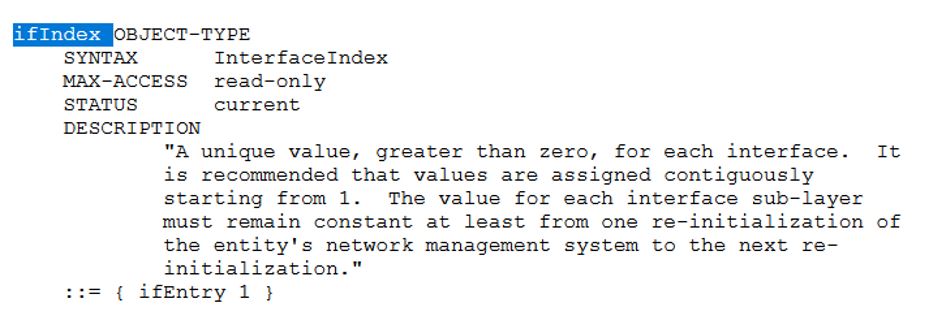
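To show what this naming problem costs in practice, here is a minimal Python sketch that normalizes the spellings listed above into one canonical name. The alias table `CANONICAL_PREFIXES` and the function `normalize_interface_name` are hypothetical names made up for this illustration, and the table only covers the Gigabit Ethernet variants mentioned in this post. A real NMS would need one such table per vendor, platform, and OS version.

```python
import re
from typing import Optional

# Hypothetical alias table: prefixes seen in syslog messages -> one canonical
# prefix. Only the variants listed in this post are covered; this is an
# assumption for illustration, not a vendor-provided mapping.
CANONICAL_PREFIXES = {
    "gi": "GigabitEthernet",
    "giga": "GigabitEthernet",
    "ge": "GigabitEthernet",
    "gigabit": "GigabitEthernet",
    "gigabitethernet": "GigabitEthernet",
}

# Letters first, then the slot/port part, e.g. "Gi" followed by "4/1".
_NAME_RE = re.compile(r"^([A-Za-z]+)\s*(\d[\d/.:]*)$")


def normalize_interface_name(raw: str) -> Optional[str]:
    """Return a canonical interface name, or None if the prefix is unknown."""
    match = _NAME_RE.match(raw.strip())
    if match is None:
        return None
    prefix, position = match.groups()
    canonical = CANONICAL_PREFIXES.get(prefix.lower())
    if canonical is None:
        return None
    return f"{canonical}{position}"


variants = ["Gi4/1", "Giga4/1", "GE4/1", "Gigabit4/1", "GigabitEthernet4/1"]
normalized = {normalize_interface_name(v) for v in variants}
```

With this table, all five spellings collapse to the single name `GigabitEthernet4/1`, while any prefix missing from the table returns `None` and has to be handled by hand. That manual upkeep is exactly the kind of hardcoding this post complains about.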

Now, if you have been monitoring networks with SNMP, I’m sure you know about the ifIndex, a unique identifier for each interface. EVERY Network Management System (NMS) starts by polling ALL router interface stats for 5 minutes. Why? Because it believes it has to :-(. Btw, just for fun, enable SNMP packet debugging on your router, find out how many NMS’es actually poll the ifTable, and share the number. But I’m digressing…

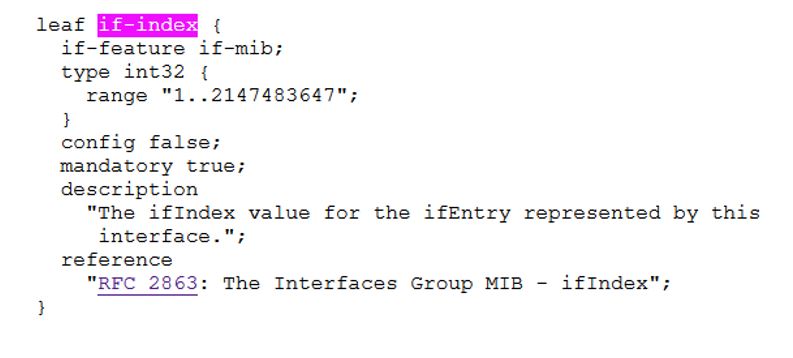

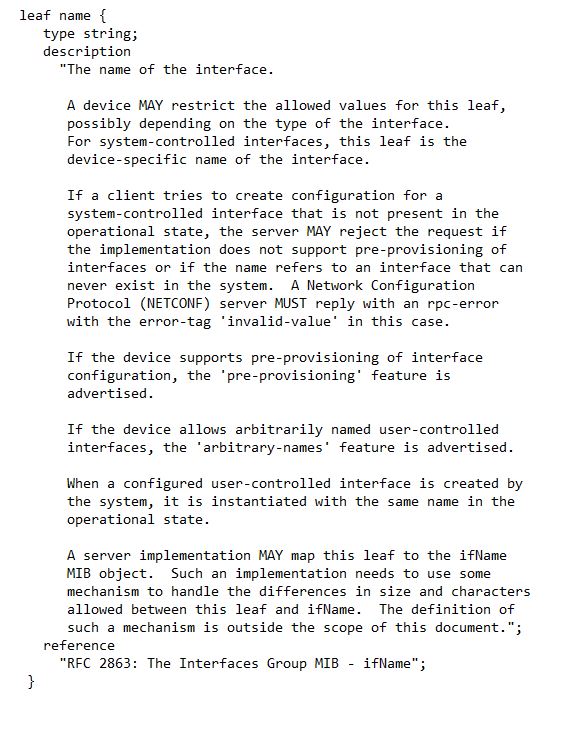

With YANG, the “name” leaf takes care of identifying the interface. Thanks to the MIB and YANG contributors to the IETF, at least the interface concepts in those two data models rely on the same ifIndex concept.

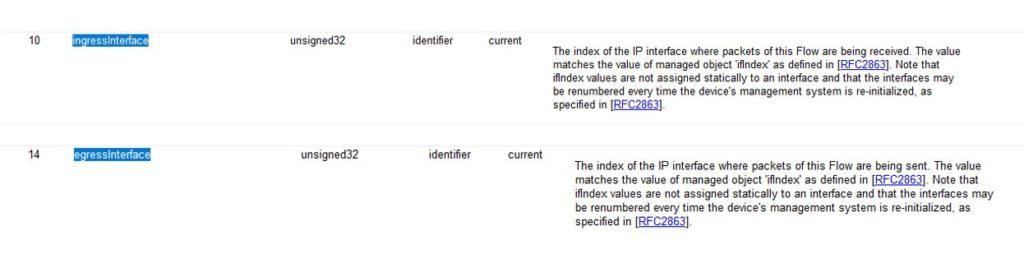

Now, let’s look at the IPFIX model, which was developed at the IETF based on NetFlow version 9. Even if ingressInterface and egressInterface report the famous ifIndex values within the flow record, the interface semantics changed: we have one specific field for the ingress traffic and another one for the egress traffic. The MIB object ifIndex doesn’t make that distinction. While it’s not difficult to map the IPFIX interface information elements to the MIB and YANG interface ones, the different semantics must be hardcoded in the NMS.
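Here is a small Python sketch of what “hardcoded in the NMS” can look like. It assumes flow records have already been decoded into dictionaries keyed by IPFIX Information Element names (ingressInterface, egressInterface, octetDeltaCount). The function name `bytes_per_interface` and the sample records are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Tuple

# The hardcoded semantics: which IPFIX Information Element carries an
# ifIndex, and which traffic direction it implies on that interface.
IPFIX_INTERFACE_FIELDS = {
    "ingressInterface": "in",
    "egressInterface": "out",
}


def bytes_per_interface(
    flows: Iterable[Mapping[str, int]],
) -> Dict[Tuple[int, str], int]:
    """Sum octetDeltaCount per (ifIndex, direction) pair."""
    totals: Dict[Tuple[int, str], int] = defaultdict(int)
    for flow in flows:
        for field, direction in IPFIX_INTERFACE_FIELDS.items():
            if_index = flow.get(field)
            if if_index is None:
                continue  # the exporter did not report this field
            totals[(if_index, direction)] += flow.get("octetDeltaCount", 0)
    return dict(totals)


# Made-up flow records, already decoded into dictionaries.
sample_flows = [
    {"ingressInterface": 3, "egressInterface": 7, "octetDeltaCount": 1500},
    {"ingressInterface": 7, "egressInterface": 3, "octetDeltaCount": 400},
    {"ingressInterface": 3, "octetDeltaCount": 100},
]
totals = bytes_per_interface(sample_flows)
```

The result is keyed by (ifIndex, direction), which is the shape needed to compare flow data with per-interface input and output counters from the MIB or YANG side. The direction is not carried by the ifIndex value itself; it only exists because the mapping table at the top says so.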

At least, BGP FlowSpec is a pure networking protocol, so its interface definition should surely reuse the well-known ifIndex, or at least the IPFIX Information Elements, right? Well, no … that would be too easy! Below are the interface types for BGP FlowSpec:

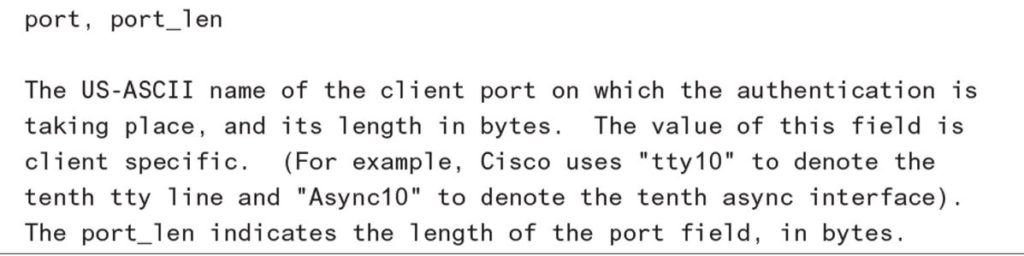

Now, to take protocols from the AAA world, here are examples of TACACS+ and RADIUS interfaces, or “ports” to use the right terminology. Those have nothing to do with the networking ifIndex definitions, even if it’s perfectly fine to host TACACS+ or RADIUS in routers.

I could multiply the examples (for example, how many IP address definitions do we have in different data models?), but I’m sure you understand my point. What protocol designers have to understand is that one day, there will be a network management/automation cross-domain use case that will require the integration, and potentially the mapping, of those different data models. While a prototype is easy to hardcode for demonstration, implementing a full mediation function is costly, inefficient, and hard to maintain. So I get upset when I see new, completely independent data models. I wish the authors would understand the long-term consequences.
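To give a feel for what even a toy mediation function involves, here is a Python sketch with one adapter per data model, each translating a source record into a common interface reference. Everything here is an assumption made for illustration: the `InterfaceRef` class, the adapter names, and the idea that records arrive as dictionaries keyed by MIB object names (ifIndex, ifDescr), ietf-interfaces leaf names (name, if-index), IPFIX Information Element names, and the RADIUS NAS-Port-Id attribute.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Mapping, Optional


@dataclass(frozen=True)
class InterfaceRef:
    """Hypothetical common representation of an interface reference."""
    source: str                      # data model the record came from
    if_index: Optional[int] = None
    name: Optional[str] = None
    direction: Optional[str] = None  # only IPFIX carries a direction


def from_snmp(row: Mapping) -> List[InterfaceRef]:
    return [InterfaceRef("snmp", if_index=row["ifIndex"], name=row.get("ifDescr"))]


def from_yang(entry: Mapping) -> List[InterfaceRef]:
    return [InterfaceRef("yang", if_index=entry.get("if-index"), name=entry["name"])]


def from_ipfix(flow: Mapping) -> List[InterfaceRef]:
    refs = []
    for field, direction in (("ingressInterface", "in"), ("egressInterface", "out")):
        if field in flow:
            refs.append(InterfaceRef("ipfix", if_index=flow[field], direction=direction))
    return refs


def from_radius(attrs: Mapping) -> List[InterfaceRef]:
    # NAS-Port-Id is free text, not an ifIndex, so only the name is filled in.
    return [InterfaceRef("radius", name=attrs.get("NAS-Port-Id"))]


# One adapter per data model: every new model means another entry to write
# and maintain.
ADAPTERS: Dict[str, Callable[[Mapping], List[InterfaceRef]]] = {
    "snmp": from_snmp,
    "yang": from_yang,
    "ipfix": from_ipfix,
    "radius": from_radius,
}


def mediate(source: str, record: Mapping) -> List[InterfaceRef]:
    """Translate one source record into common interface references."""
    return ADAPTERS[source](record)


refs = mediate("ipfix", {"ingressInterface": 3, "egressInterface": 7})
```

Even this toy version needs four hand-written adapters for a single concept, and it ignores the harder part: deciding when a RADIUS port string and an ifIndex refer to the same physical interface.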

To get rid of the CLI, the networking industry converged on YANG for configuration. And there is hope with the networking industry’s convergence on (YANG) model-driven telemetry to stream the service operational data. At least, that would help reduce the number of data models… and hence simplify the (cost of) automation and analytics! Isn’t that what we should all want?

More information: Host Speaker Series: Telemetry – Industry Status, Challenges, and IETF Opportunities – YouTube